Hello friends,

This week: Highlights from April’s Interintellect discussion of the many ways the word “risk” is misapplied to situations of not-knowing that aren’t formally risky (reproduced below), and more photos of rocks.

But first ...

Announcements 📢

Thinking about not-knowing #5: False advertising. We instinctively trust those who claim to tame not-knowing or protect us from it. The people and institutions who manage our money, govern our countries, and build our tools want and need our trust — so they often claim to have good methods for dealing with uncertainty. Maybe we should be more skeptical of these claims? Let’s talk about it in the upcoming 5th episode in the Interintellect series about not-knowing. Thursday, 18 May, 8-10PM CET; on Zoom and open to all. More information and tickets here. Drop me a line if you want to join but the ticket price is an insurmountable barrier — I'll sort you out.

Our totally absurd social media situation. Like so many other people, I’m poking around at too many different options. If you’re inclined, you can find me honing my bewilderment practice on …

Twitter: @vaughn_tan,

Farcaster: @vt,

Bluesky: @vaughn.bsky.social,

Instagram: @vaughn.tan (this is always going to be mostly photos of stuff I see literally on the street).

Naming risky situations

A few weeks ago, we met to discuss the many ways we (mis)use the word “risk.” (This was the fourth session in my Interintellect discussion series on not-knowing, and the session pre-reading was an essay titled “How to think more clearly about risk.”)

Participants again came from a wide range of backgrounds, including innovation consulting, programming, military strategic planning, in-house innovation strategy, and psychology: Colin R., Indy N., Mike W., Karen A., Kevin M., Travis K., Daniel O., Anne-Marie N., Chris B.

We began with two observations and the problem that results from them.

Observation 1: The word “risk” has a formal definition: A situation where the precise outcome is unknown, but all possible actions, outcomes, and probabilities of outcomes given actions are known. In other words, formal risk is when we know almost everything about what we don't know, such that the conditions for strict formal rationality are met. In a situation of formal risk, it's possible to make clear decisions about how to act using well-established methods (value in expectation, cost-benefit analysis, etc). These methods for decisionmaking under formal risk depend on both precise (= exact) and accurate (= correct) knowledge of probabilities.
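To make deciding by expected value under formal risk concrete, here is a minimal Python sketch. The bet and its payoffs are hypothetical and made up for illustration. Only the fair coin comes from the text, because its probabilities are known exactly. Exact fractions are used so that nothing about the probabilities is approximate.

```python
from fractions import Fraction


def expected_value(outcomes):
    """Expected value of a list of (probability, payoff) pairs.

    Assumes formal risk: every outcome is listed and each probability is
    known exactly, so the probabilities must sum to exactly 1.
    """
    if sum(p for p, _ in outcomes) != 1:
        raise ValueError("probabilities must sum to exactly 1")
    return sum(p * payoff for p, payoff in outcomes)


# Hypothetical bet on a fair coin: win 3/2 on heads, lose 1 on tails.
# The probabilities (1/2 each) are known exactly, as formal risk requires.
bet = [(Fraction(1, 2), Fraction(3, 2)), (Fraction(1, 2), Fraction(-1))]
# Declining the bet has one certain outcome with a payoff of 0.
decline = [(Fraction(1), Fraction(0))]

ev_bet = expected_value(bet)          # 1/2 * 3/2 + 1/2 * (-1) = 1/4
ev_decline = expected_value(decline)  # 0
choice = "bet" if ev_bet > ev_decline else "decline"
print(ev_bet, ev_decline, choice)     # prints: 1/4 0 bet
```

Under formal risk, this calculation is all the decision needs. The bet has a positive expected value of 1/4 per play, so the rule says to take it. The calculation silently assumes that the probabilities fed into it are both precise and accurate.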

Observation 2: Formal risk is rare in real life and is limited to stuff like betting on the outcome of flipping a fair coin or rolling a fair die. This means that the word “risk” is used in a looser sense to describe many real-world situations of not-knowing that are not formally risky. The session pre-reading offers examples of some of these situations of non-risk not-knowing. A cynical view is that this loose usage is intended to make these situations seem more knowable and manageable than they really are.

The resultant problem: The word “risk” is used to describe non-risk situations of not-knowing, so decisionmaking methods appropriate for formal risk are used in these situations. These methods depend on accurate and precise knowledge of probabilities. In situations of non-risk not-knowing, probabilities may be estimated precisely but almost never accurately. The emerging kerfuffle around Silicon Valley Bank (“poor risk management!”) is just one example of the consequences of misnaming the type of not-knowing we face. The reading offers other examples of the consequences of applying risk mindset to decisionmaking in non-risk situations.

Formal risk is rare in real life, so the word “risk” is misused to describe situations of not-knowing that aren't formally risky. Decisionmaking methods suitable only for formal risk are nonetheless applied in those situations, and these methods simply fail when applied to non-risk situations of not-knowing.

Outside of a handful of economists, the word “risk” is rarely understood or used according to its formal definition: a situation where the precise outcome is unknown, but all possible actions, outcomes, and probabilities of outcomes given actions are known. Situations of formal risk practically never occur in real life.

There is a widespread informal understanding and usage of “risk” as some combination of partial knowledge and its (usually negative) consequences. However, different groups of people mean different and specific things when they use the word.

Some of these informal yet specific meanings of “risk” are:

A potential or imagined problem.

A set of known problems with known mitigations.

The probability of a specific outcome that is known to be bad — an outcome with a negative valence.

Generally, outcomes with negative valences (without probabilities attached to them).

A specific outcome that is bad for some people but not for others — an outcome with a subjectively negative valence.

The cost of not taking a particular mitigating action (i.e., the cost of inaction).

The negative unforeseen consequences of a given action.

Specific to the US military: A framework of domain-specific conceptions of potential threats (e.g., acquisition risk, program risk, inaction risk, risk to mission, risk to personnel).

These informal definitions are in fact quite formal, in the sense that each means something specific that is conceptually distinct from the others, though they may overlap in practice. We came up with these 8 informal definitions among the 10 participants in the group, so there are probably many more out there. The wide range of informal definitions of “risk” reflects a commonplace understanding that the world is fuzzy-edged and ambiguous, and that our knowledge of the future is highly imperfect.

Conventional risk mindset and its associated methodologies (cost-benefit analyses, expected value analyses, etc) come out of the formal definition of risk. Formal risk is, by definition, precisely and accurately quantifiable, so risk mindset relies on precise and accurate probabilities of outcomes. (Important: precision and accuracy are not the same thing.)
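The difference between precision and accuracy can be seen in a minimal Python sketch of an expected-value decision rule. All numbers here are hypothetical: an estimated win probability stated precisely (to three decimal places) but inaccurately, and a "true" probability that, in a non-risk situation, nobody would actually have. The calculation is identical in both cases; only the accuracy of the input differs.

```python
from fractions import Fraction


def expected_value(p_win, win_payoff, loss_payoff):
    """Expected value of a two-outcome bet, given a probability of winning."""
    return p_win * win_payoff + (1 - p_win) * loss_payoff


def decide(p_win, win_payoff, loss_payoff):
    """Risk-mindset decision rule: bet only if the expected value is positive."""
    if expected_value(p_win, win_payoff, loss_payoff) > 0:
        return "bet"
    return "decline"


# Hypothetical payoffs, chosen only for illustration.
WIN, LOSS = 100, -100

# A precise estimate, stated to three decimal places.
estimated_p = Fraction("0.600")
# The correct probability, which in a non-risk situation we would not know.
true_p = Fraction("0.400")

print(expected_value(estimated_p, WIN, LOSS), decide(estimated_p, WIN, LOSS))
print(expected_value(true_p, WIN, LOSS), decide(true_p, WIN, LOSS))
```

The method gives no signal that anything is wrong. A precise but inaccurate input produces an equally precise output (an expected value of 20) and a confident recommendation to bet, while the correct probability gives an expected value of -20 and the opposite recommendation. Nothing in the arithmetic reveals how inaccurate the estimate is.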

Conventional risk mindset is comforting because it implicitly suggests that the situation is knowable and controllable, in the sense that it allows for precise and accurate probabilities. But it only works as expected when precise and accurate probabilities are actually available, so risk mindset may be inappropriate for the vast majority of real-world situations. Nonetheless, interpreting a situation as “risky” implicitly justifies using conventional risk mindset to deal with it. This is comforting even when risk mindset is inappropriate for the situation.

Some open questions:

Is it possible to communicate “riskiness” of a situation effectively when there are so many different and partially overlapping informal definitions of “risk”?

How should we make decisions when we know that our precise probability estimates are unlikely to be accurate and when we don't know how inaccurate they are?

What if there is no “real” probability? This is an epistemological question about whether all events must have a true probability or not.

In what situations are numerical probabilities impossible to estimate?

How should Bayesians deal with unknown situations for which they have no priors? Is it truly the case that a randomly selected prior is better than none?

Some observations:

Estimates of an outcome's probability are often connected to the outcome's desirability, even when the outcome is produced by a mechanism with a stable and known distribution of outcome states.

Beliefs about the state of the world (e.g., “I believe the situation we are facing is one of formal risk” or “I believe that there is a 24-33% probability that my action will result in Skynet, and that my probability estimate is highly accurate”) are crucial underlying factors in decisionmaking that are rarely interrogated or made explicit.

Animal metaphors (black swans, grey rhinos, etc) might offer a useful taxonomy of partial knowledge.

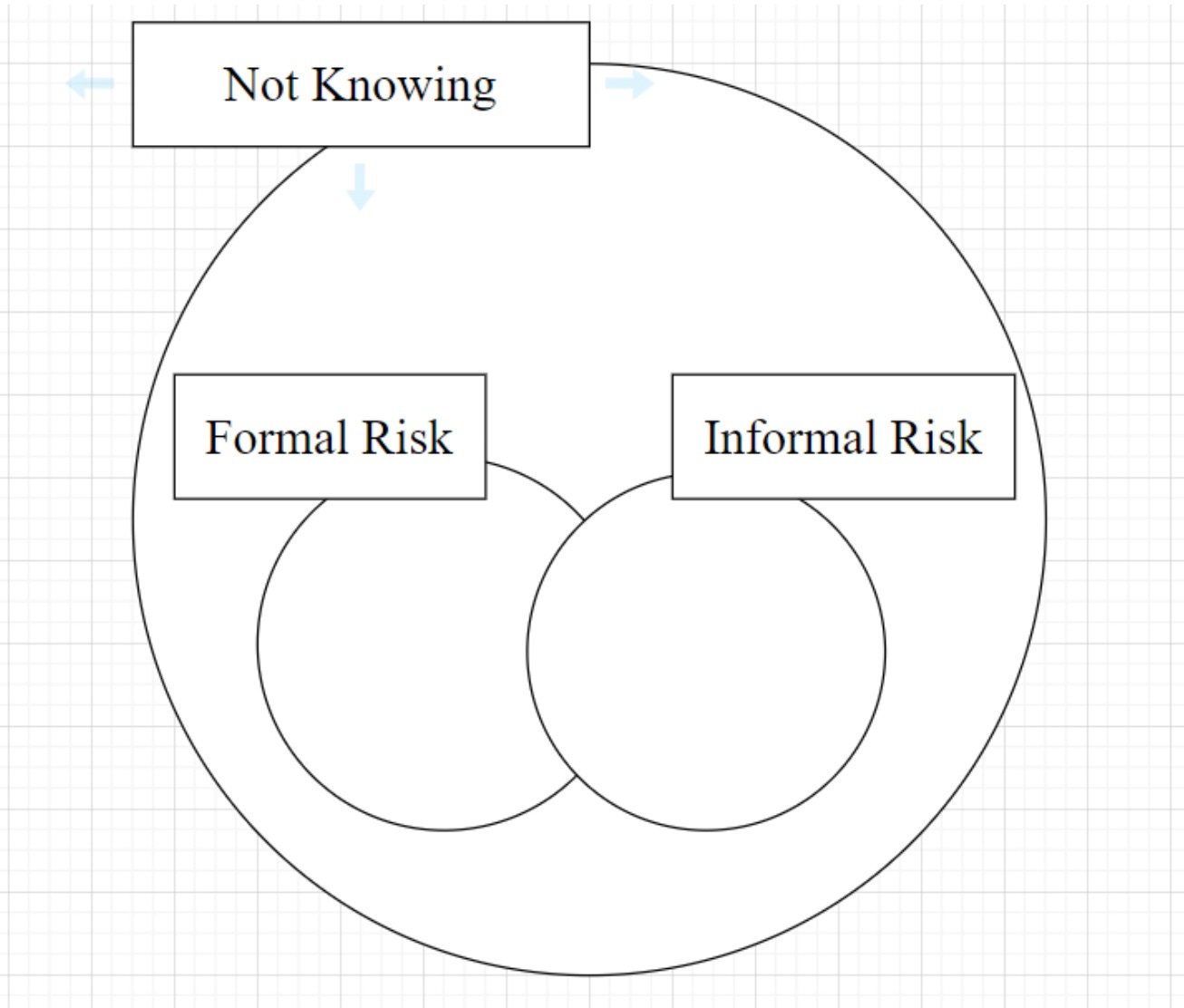

A Venn diagram of not-knowing, formal risk, Knightian uncertainty, and informal “risk” clarifies where these concepts do (and don’t) overlap. One participant contributed this quick sketch during the session:

I added to it to produce the diagram below (still a work in progress):

Next time, I’ll write a bit about the practice of iterating through visualisations of an emerging concept like not-knowing.

Other writing

I used to write papers using LaTeX, during which time I fell deep into the rabbit hole of good digital type handling and the separation of style and content. I have since let this practice lapse, about which I feel some regret and much relief.

Not-knowing is a concept that is still emerging. I am trounced every time I try to lay down something linear and determinate about it. But I now have a slowly growing collection of short essays on aspects of not-knowing, and some coherence is beginning to show up among them.

And, finally, what you’ve been waiting for:

More rocks

Also see the previous issue of this newsletter, which was about the appreciation of rocks.

See you next time,

VT
